Introduction

The concept of fuzzy set was introduced by Zadeh in 1965 [1] to allow elements to belong to a set in a gradual rather than an abrupt way (i.e. permitting memberships valued in the interval [0,1] instead of in the set {0,1}). Ever since then, applications and developments based on this simple concept have evolved to such an extent that it is now hard to find an area or type of problem in which no applications, developments or products are based on fuzzy sets.

One particularly important type of problem is the optimization problem, in which the value that a function may reach on a previously specified set is optimized; these problems and everything relating to them are covered by the area known as mathematical programming. When fuzzy elements are considered in mathematical programming, fuzzy optimization methods emerge, and these are perhaps one of the most fruitful areas of fuzzy-related knowledge, from both the theoretical and the applied points of view. Yet despite all its methods and models for solving an enormous variety of real practical situations, fuzzy optimization, like conventional mathematical programming, cannot solve every possible situation: a problem may be expressible in fuzzy terms and still not be solvable with fuzzy techniques.

The ease of tackling ever larger real problems, the impossibility of discovering exact solutions to these problems in every case, and the need to provide answers to the practical situations considered have, in a great many cases, led to the increasing use of heuristic-type algorithms, which have proved to be valuable tools capable of providing solutions where exact algorithms cannot. In recent years, a large catalogue of heuristic techniques has emerged, inspired by the principle that satisfaction is better than optimization: rather than failing to provide the optimal solution to a problem, it is better to give a solution which at least satisfies the user in some previously specified way. These techniques have proved to be extremely effective.

These heuristics are said to have been mostly inspired by nature, society, physics, etc. to produce theoretical models which match the circumstances considered, and from this perspective, it has been possible to solve cases which, until only very recently, were impossible with conventional techniques. In most cases, however, the solutions achieved have not been optimal and are instead “almost optimal”, having been obtained with criteria other than the classic “achieving the best value of the objective function”, by considering characteristics which have been subjectively established by the decision-maker.

It is well known that when we speak of human subjectivity, or even closeness to an ideal value, the best comparative way of modelling this type of situation is by means of fuzzy sets, or more generally with soft computing methodologies. This method of modelling subjectivity (which is so developed in other fields) has hardly ever been applied to the case of heuristic algorithm design despite all indications that this might well be a very promising approach because in addition to providing solutions which are as close to the optimum as other well-known conventional heuristic ones,

a) they solve the problem in a less costly way than other methods;

b) they generalize already known heuristics; and

c) the hybridization in the soft computing context favours and enriches the appearance of original procedures which can help resolve new problems.

However, while the historic path of fuzzy sets and systems has been much explored, the same cannot be said of soft computing. In order to narrow this gap, we will describe what soft computing is and what is understood by heuristics, and from both concepts we will attempt to find a common ground where the best of both worlds can be combined. There will be two results. The first is that soft computing-based metaheuristic procedures will emerge, which appear to be one of the most promising tools for the effective solution of problems which are as yet impossible to solve, and also for finding solutions which suit the person looking for them. The second (as a result of the first) is that a new description will emerge of the components which define soft computing, and this will further extend its sphere of application.

Consequently, the next section presents the original concept of soft computing and its main classical constituents. Section 3 then focuses on the definition of heuristics and metaheuristics. The soft computing components are reviewed in section 4, and in section 5 new hybrid metaheuristics in soft computing are presented and briefly described. The main conclusions and the bibliography close the paper.

Soft Computing

Prior to 1994, when Zadeh [2] first defined “soft computing”, the concepts it now covers used to be referred to in isolation, each being discussed individually with an indication of its use of fuzzy methodologies. Although the idea of establishing the area of soft computing dates back to 1990 [3], it was in [2] that Zadeh established the definition of soft computing in the following terms:

"Basically, soft computing is not a homogeneous body of concepts and techniques. Rather, it is a partnership of distinct methods that in one way or another conform to its guiding principle. At this juncture, the dominant aim of soft computing is to exploit the tolerance for imprecision and uncertainty to achieve tractability, robustness and low solutions cost. The principal constituents of soft computing are fuzzy logic, neurocomputing, and probabilistic reasoning, with the latter subsuming genetic algorithms, belief networks, chaotic systems, and parts of learning theory. In the partnership of fuzzy logic, neurocomputing, and probabilistic reasoning, fuzzy logic is mainly concerned with imprecision and approximate reasoning; neurocomputing with learning and curve-fitting; and probabilistic reasoning with uncertainty and belief propagation".

It is therefore clear that soft computing is not given a precise definition but is instead defined by extension, by means of different concepts and techniques which attempt to overcome the difficulties arising in real problems, which occur in a world that is imprecise, uncertain and difficult to categorize.

There have been various subsequent attempts to further hone this definition, with differing results, and among the possible alternative definitions, perhaps the most suitable is the one presented in [4]: "Every computing process that purposely includes imprecision into the calculation on one or more levels and allows this imprecision either to change (decrease) the granularity of the problem, or to "soften" the goal of optimalisation at some stage, is defined as to belonging to the field of soft computing".

The viewpoint that we will consider here (and which we will adopt in future) is another way of defining soft computing, whereby it is considered to be the antithesis of what we might call hard computing. Soft computing could therefore be seen as a series of techniques and methods so that real practical situations could be dealt with in the same way as humans deal with them, i.e. on the basis of intelligence, common sense, consideration of analogies, approaches, etc. In this sense, soft computing is a family of problem-resolution methods headed by approximate reasoning and functional and optimization approximation methods, including search methods. Soft computing is therefore the theoretical basis for the area of intelligent systems and it is evident that the difference between the area of artificial intelligence and that of intelligent systems is that the first is based on hard computing and the second on soft computing.

From this other viewpoint, on a second level, soft computing can then be expanded into other components which contribute to a definition by extension, such as the one first given. From the beginning [5], the components considered to be the most important on this second level are probabilistic reasoning, fuzzy logic and fuzzy sets, neural networks, and genetic algorithms (GA), which because of their interdisciplinary nature, applications and results immediately stood out over other methodologies such as the previously mentioned chaos theory, evidence theory, etc. The popularity of GA, together with their proven efficiency in a wide variety of areas and applications, their attempt to imitate natural creatures (e.g. plants, animals, humans) which are clearly soft (i.e. flexible, adaptable, creative, intelligent, etc.), and especially their extensions and different versions, transform this fourth second-level ingredient into the well-known evolutionary algorithms (EA), which consequently comprise the fourth fundamental component of soft computing, as shown in the following diagram:

From this last conception of soft computing, in which fuzzy sets and fuzzy logic necessarily play a basic role, we can describe other areas emerging around it simply by considering some of the possible combinations which can arise:

1. From the first level and beginning with approximate reasoning methods, when we only concentrate on probabilistic models, we encounter the Dempster-Shafer theory and Bayesian networks. However, when we consider probabilistic methods combined with fuzzy logic, and even with some other multi-valued logics, we encounter what we could call hybrid probabilistic models, fundamentally probability theory models for fuzzy events, fuzzy event belief models, and fuzzy influence diagrams.

2. When we look at the developments directly associated with fuzzy logic, fuzzy systems and in particular fuzzy controllers stand out. Then, arising from the combination of fuzzy logic with neural networks and EA are fuzzy logic-based hybrid systems, the foremost exponents of which are fuzzy neural systems, controllers adjusted by neural networks (neural fuzzy systems which differ from the previously mentioned fuzzy neural systems), and fuzzy logic-based controllers which are created and adjusted with EA.

3. Moving through the first level to the other large area covered by soft computing (functional approximation/optimization methods), the first component which appears is that of neural networks and their different models. Arising from the interaction with fuzzy logic methodologies and EA methodologies are hybrid neural systems, and in particular fuzzy control of network parameters, and the formal generation and weight generation in neural networks.

4. The fourth typical component of soft computing, and perhaps the newest and most up-to-date, is that of EA, and associated with these are four large, important areas: evolutionary strategies, evolutionary programming, GA, and genetic programming. If we were only to focus on these last areas, we could consider that in this case the amalgam of methodologies and techniques associated with soft computing culminates in three important lines: fuzzy genetic systems, bioinspired systems, and applications for the fuzzy control of evolutionary parameters.

On further examination of this last component some additional considerations are needed. Firstly, independently of the broad-minded approach adopted to contemplate what can be embraced by fuzzy genetic systems, bioinspired systems, and fuzzy control applications on evolutionary parameters, other important topics are missing from this description. Secondly, if we are referring in particular to bioinspired systems, it is clear that not only are they the product of fuzzy logic, neural networks or EA (with all the variants that we can consider for these three components) but also that other extremely important methodologies are involved in them.

In the sections which follow we will therefore justify a new definition for soft computing components, which was first referred to in [6], in order to provide a clearer perspective of the different areas that this covers without any loss of essence.

Heuristics and Metaheuristics

As stated in [7], since the fuzzy boom of the 1990s, methodologies based on fuzzy sets (i.e. soft computing) have become a permanent part of all areas of research, development and innovation. Their application has been extended to all areas of our daily life (health, banking, the home), and they are also the object of study on different educational levels. Similarly, there is no doubt that thanks to the technological potential that we currently have, computers can handle problems of tremendous complexity (both in comprehension and dimension) in a wide variety of new fields.

As we mentioned above, since the mid 1990s, GA (or EA from a general point of view) have proved to be extremely valuable for finding good solutions to specific problems in these fields, and thanks to their scientific attractiveness, the diversity of their applications and the considerable efficiency of their solutions in intelligent systems, they have been incorporated into the second level of soft computing components.

EA, however, are merely another class of heuristics, or metaheuristics, in the same way as Taboo Search, Simulated Annealing, Hill Climbing, Variable Neighbourhood Search, Estimation of Distribution Algorithms (EDA), Scatter Search, GRASP, Reactive Search and very many others. Generally speaking, all these heuristic algorithms (metaheuristics) usually provide solutions which are not ideal, but which largely satisfy the decision-maker or the user. When these act on the basis that satisfaction is better than optimization, they perfectly illustrate Zadeh’s famous sentence [2]: "...in contrast to traditional hard computing, soft computing exploits the tolerance for imprecision, uncertainty, and partial truth to achieve tractability, robustness, low solution-cost, and better rapport with reality".

Consequently, among the soft computing components, instead of EA (which can represent only one part of the search and optimization methods used), heuristic algorithms and even metaheuristics should be considered.

There is usually controversy about the difference between metaheuristics and heuristics, and while it is not our intention here to enter into this debate, we are interested in offering a brief reflection on both concepts. The term heuristics comes from the Greek word “heuriskein”, the meaning of which is related to the concept of finding something and is linked to Archimedes’ famous and supposed exclamation, “Eureka!”.

On this basis, a large number of heuristic procedures have been developed to solve specific optimization problems with great success, and the best of these have been extracted and used in other problems or in more extensive contexts. This has contributed to the scientific development of this field of research and to the extension of the application of its results. As a result, metaheuristics have emerged, a term which appeared for the first time in an article by Fred Glover in 1986.

The term metaheuristics derives from the combination of the word heuristics with the prefix meta (meaning beyond or of a higher level), and although there is no formal definition for the term metaheuristics, the following two proposals give a clear representation of the general notion of the term:

a) I. H. Osman and G. Laporte [18]: "An iterative generation process which guides a subordinate heuristic by combining intelligently different concepts for exploring and exploiting the search space".

b) S. Voss et al. [19]: "is an iterative master process that guides and modifies the operations of subordinate heuristics to efficiently produce high quality solutions".

It is therefore clear that metaheuristics are more broad-brush than heuristics. In the sections which follow, we will focus on the concept of metaheuristics, and will start by pointing out that in the terms that we have defined, certain metaheuristics will always be better than others in terms of their performance when it comes to solving problems.

In order to achieve the best performance of the metaheuristics, it is desirable for them to have a series of “good properties” which include simplicity, independence, coherence, effectiveness, efficiency, adaptability, robustness, interactivity, diversity, and autonomy [8]. In view of their definition and the series of desirable characteristics, it is both logical and obvious that EA are to be found among metaheuristics and they are therefore well placed with the other second-level soft computing components to facilitate the appearance of new theoretical and practical methodologies, outlines, and frameworks for a better understanding and handling of generalized imprecision in the real world (as explained in [3]).

A Review of Soft Computing Components

Returning to the previous description of the components which describe soft computing on the different levels, we could say that the most important second-level components are probabilistic reasoning, fuzzy logic and sets, neural networks and, in view of what we have explained, metaheuristics (which would typically encompass EA but would not be confined to these exclusively). The new defining framework for the main methodologies which make up soft computing would therefore be described as in the following diagram:

As we explained before, rather than understanding soft computing methodologies in an isolated way, it is necessary to understand them through the hybridization of their second-level components. Correspondingly, it is perfectly logical for us to explore the new theoretical-practical facets deriving from the appearance of metaheuristics among these components.

There are so many and such a variety of metaheuristics available that it is practically impossible to agree on one universally-accepted way of classifying them. Nevertheless, the hierarchy on which there is the most consensus considers three (or four) foremost groups:

1) metaheuristics for evolutionary procedures based on sets of solutions which evolve according to natural evolution principles.

2) metaheuristics for relaxation methods, i.e. problem-solving methods that use adaptations of the original model which are easier to resolve.

3) metaheuristics for neighbourhood searches, which explore the solution space and exploit neighbourhood structures associated with these solutions.

4) other types of intermediate metaheuristics between the ones mentioned above or derived in some way from them, but which we will not consider because of their great variability (and to avoid dispersion).

We have decided to classify the metaheuristics in this way, and what is at first apparent is that our previous definition of soft computing “by extension” according to its components, not only maintains the essence of Zadeh’s original definition but generalizes and expands it to contemplate new possibilities. In effect, if we were to call these four groups of metaheuristics MH(1), ... MH(4), respectively, the previous diagram could now be represented more explicitly as shown below,

where, because the classic soft computing components are still present, the different known and studied areas remain as they are, emerging as always when two or more of these components are interrelated. However, as a result of having incorporated new possibilities into the fourth component (metaheuristics), it now makes perfect sense to expect new hybrid models to appear and be developed.

In order to demonstrate the range of study areas at our disposal when metaheuristics is taken as the base component, in the following sections we will concentrate on describing the hybridizations which arise through the use of the previous categorization.

Hybrid Metaheuristics in Soft Computing

In this section, we will consider the three main previously mentioned groups of metaheuristics. From these, we will then describe the new metaheuristics which have emerged, briefly dwelling on the less developed or less popular ones because they are more recent.

5.1. Evolutionary Metaheuristics. These metaheuristics are by far the most popular and define mechanisms for developing an evolution in the search space of the sets of solutions in order to come close to the ideal solution with elements which will survive in successive generations of populations. In the context of soft computing, the hybridizations which take these metaheuristics as a reference are fundamental:

Although this is a very important and very wide area (covering everything from fuzzy genetic systems to the adjustment of fuzzy controllers with evolutionary algorithms, in addition to EDA, bioinspired systems, etc.), it is beyond the scope of this article and those interested should refer to ([9, 10, 11]).

5.2. Relaxation metaheuristics. A real problem may be relaxed when it is simplified by eliminating, weakening or modifying one of its characteristic elements. Relaxation metaheuristics are strategies for relaxing the problem in heuristic design, and which are able to find solutions for problems which would otherwise have been very difficult to solve without the use of this methodology. Examples of these are rounding up or down or adjustments in nature, as occurs when an imprecisely and linguistically-expressed quantity is associated to an exact numerical value. From this point of view, a real alternative is to flexibilize exact algorithms, introducing fuzzy stop criteria, which eventually leads to rule-based relaxation metaheuristics; admitting the vagueness of coefficients, justifying algorithms for resolving problems with fuzzy parameters, and relaxing the verification of restrictions, allowing certain violations in their fulfillment:

In order to illustrate some of these metaheuristics more specifically, we will consider algorithms with fuzzy stop criteria [12, 13]. We know that the stop criteria fix the end conditions of an algorithm’s iterative procedure, establishing these criteria from the problem’s theoretical features, from the type of solution being sought, and from the type of algorithm used. If a given algorithm provides the succession (xn) of feasible solutions, some of the most frequent stop criteria are:

a) stop the process after N iterations;

b) stop the process when the relative or absolute distance between two elements of the succession, from a certain iteration onwards, is less than or equal to a prefixed value;

c) stop the process when a prefixed measure g(xn) satisfies a certain condition such as being less than or equal to a constant.

In short, it can be said that an algorithm determines a reference set and stops when the set specified in the stop criteria has been obtained. The flexibilization of exact algorithms with the introduction of fuzzy stop criteria therefore assumes that the reference set is considered to be a fuzzy set, and the stop criteria are fixed according to the membership degree of the elements.
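As an illustration of criterion c) with a fuzzy reference set, the following minimal Python sketch may help. It is not taken from [12, 13]: the linear membership function, the names `membership` and `iterate`, the parameters `eps`, `tol` and `alpha`, and the toy halving iteration are all assumptions made only for this example. The crisp criterion "stop when g(xn) is at most eps" is softened so that values slightly above eps are still considered "small enough" to some degree, and the process stops as soon as that degree reaches a chosen level alpha.

```python
def membership(g, eps, tol):
    """Degree to which the measure g counts as 'small enough'.

    Assumed shape: 1 for g <= eps, 0 for g >= eps + tol, linear in between.
    """
    if g <= eps:
        return 1.0
    if g >= eps + tol:
        return 0.0
    return (eps + tol - g) / tol


def iterate(x0, step, g, eps, tol, alpha, max_iter=1000):
    """Apply `step` repeatedly; stop when membership(g(x)) reaches alpha.

    Returns the last iterate and the number of steps performed.
    """
    x = x0
    for n in range(max_iter):
        if membership(g(x), eps, tol) >= alpha:
            return x, n
        x = step(x)
    return x, max_iter


def halve(x):
    # Toy iterative procedure: the succession 1, 1/2, 1/4, ... tends to 0.
    return x / 2


# alpha = 1 reproduces the crisp criterion g(x) <= eps.
crisp = iterate(1.0, halve, abs, eps=0.01, tol=0.04, alpha=1.0)
# alpha = 0.5 accepts any iterate that is 'small enough' to degree 0.5.
fuzzy = iterate(1.0, halve, abs, eps=0.01, tol=0.04, alpha=0.5)
print(crisp, fuzzy)
```

In this toy run the fuzzy criterion stops one iteration earlier than the crisp one, at the price of a slightly larger final value; the level alpha expresses how much of that trade-off the decision-maker is willing to accept.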

5.3. Search metaheuristics. Generally speaking, these are probably the most important metaheuristics, and their basic operation consists in establishing strategies for exploring the solution space of the problem and iterating the starting-point solutions. Although at first sight they might appear to be similar to evolutionary searches, they are not since evolutionary searches base their operation on the evolution of a population of individuals in the search space. These metaheuristics are usually described by means of various metaphors, which classify them as bioinspired, sociological, based on nature, etc. and this makes them extremely popular.

However, outside this descriptive framework, given that a search can be made by means of a single search procedure (or by more than one in which case the search methods could either cooperate with each other or not) the search metaheuristic (without this classification being exclusive for this section) can be considered as individual or multiple, allowing in this last case the possibility for different agents to cooperate with each other. The different options which can emerge in the context of soft computing are collected in the following diagram:

Among the best known individual metaheuristics are Hill Climbing, Greedy-like, Multi-start, Variable Neighbourhood, Simulated Annealing, Taboo, … which have their own fuzzy extensions.

Independently of their specific method of action, all these metaheuristics explore the search space according to evaluations of the objective function of the specific problem which is being solved, and this explicitly supposes performing numerical valuations with the help of an objective function in a precisely defined space. Only too often, however, the objective function represents some vaguely established property, and the search space (or the neighborhoods being searched) has no clearly defined boundaries, and this makes it logical to focus the application of these metaheuristics with theoretical elements from the sphere of fuzzy logic and fuzzy sets. It is precisely in this context that FANS-type algorithms emerge [14,15].

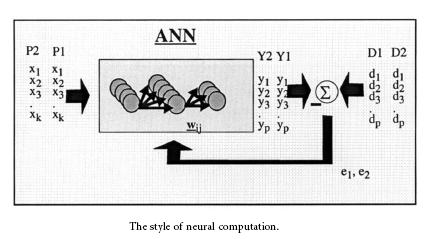

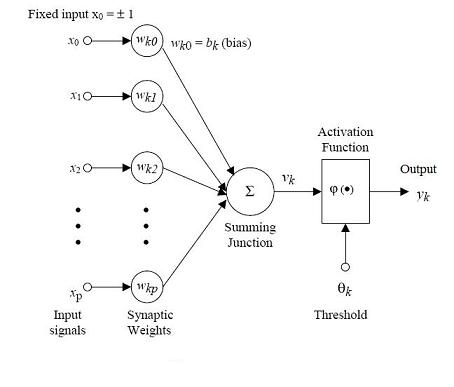

FANS is a neighborhood search method where the solutions are evaluated not only in terms of the objective functions but also through the use of fuzzy properties and concepts which enable qualitative valuations on the solutions. It is also a method which may be adapted to the context since its behaviour varies according to the state of the search through the use of various administrators. FANS is based on four main components (O, FV, OS and NS) and a diagram of the algorithm is shown below to display the interaction between these four components.
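To make the role of a fuzzy valuation in a neighbourhood search more concrete, a small Python sketch follows. It is not the FANS algorithm of [14,15]. It is a toy under stated assumptions: the objective `LANDSCAPE`, the membership function `acceptable`, the slack `beta`, the level `lam` and the step sizes are all hypothetical, and reading the four components as a move operator, a fuzzy valuation, an operator scheduler and a neighbourhood scheduler is our interpretation for the purposes of the example.

```python
LANDSCAPE = [9, 4, 6, 3, 5, 1, 7, 0, 8]  # toy objective values, to be minimised


def f(x):
    return LANDSCAPE[x]


def acceptable(f_cur, f_new, beta):
    """Fuzzy valuation: degree to which a neighbour is 'acceptable'.

    Assumed shape: 1 if it is no worse than the current solution,
    0 if it is worse by beta or more, linear in between.
    """
    if f_new <= f_cur:
        return 1.0
    if f_new >= f_cur + beta:
        return 0.0
    return (f_cur + beta - f_new) / beta


def fuzzy_search(x0, steps, lam, beta=4.0, max_iter=50):
    x = best = x0
    k = 0  # index of the step size currently used by the operator
    for _ in range(max_iter):
        # Operator: move steps[k] positions right or left, staying in range.
        neighbours = [c for c in (x + steps[k], x - steps[k])
                      if 0 <= c < len(LANDSCAPE)]
        # Neighbourhood scheduler: first neighbour acceptable to degree lam.
        chosen = next((c for c in neighbours
                       if acceptable(f(x), f(c), beta) >= lam), None)
        if chosen is None:
            # Operator scheduler: nothing acceptable, try the next step size.
            k += 1
            if k == len(steps):
                break
            continue
        x, k = chosen, 0
        if f(x) < f(best):
            best = x
    return best


plain = fuzzy_search(0, steps=(1,), lam=1.0)      # only non-worsening moves
tolerant = fuzzy_search(0, steps=(1,), lam=0.4)   # mildly worse moves allowed
adaptive = fuzzy_search(0, steps=(1, 2), lam=0.4) # plus a second step size
print(plain, tolerant, adaptive)
```

On this landscape the plain search stays in the first local minimum, the tolerant one passes over it by accepting moves that are only partially "acceptable", and the version that can also change its operator reaches the global minimum.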

If, however, the search procedure is performed using various metaheuristics, there is always the possibility of cooperation between these [16], and therefore of generalizing everything described so far to the context of parallelism. This is obviously beyond the scope of this paper, but it is interesting to reflect on: with the proliferation of parallel computing, more powerful workstations and faster communication networks, parallel implementations of metaheuristics have emerged as something natural and provide an interesting alternative for increasing the speed of the search for solutions. Various strategies have correspondingly been proposed and applied, and these have proved to be very efficient for resolving large-scale problems and for finding better solutions than those of their sequential counterparts, either because the search space is divided or because the intensification and diversification of the search are improved. As a result, parallelism (and therefore multiple metaheuristics) not only constitutes a way of reducing the execution times of individual metaheuristics, but also of improving their effectiveness and robustness.

In the soft computing framework, the basic idea which has been developed so far consists in supposing that there is a set of solving agents [17], which are basically algorithms for solving combinatorial optimization problems, and executing them cooperatively by means of a coordinating agent to solve the problem in question, taking generality based on minimum knowledge of the problem as a fundamental premise. Each solving agent acts autonomously and only communicates with the coordinating agent, to send it the solutions as it finds them and to receive guidelines about how to proceed. The coordinating agent receives the solutions found by each solving agent and, following a fuzzy rule base which models its behaviour, creates the guidelines which it then sends to them, thereby taking total control of the strategy.
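The communication pattern just described can be sketched in a few lines of Python. This is only an illustration and not the strategy of [17]: the toy objective `f`, the `Solver` class, the single rule "if an agent's solution is bad, send it the best solution found so far", the membership function `degree_bad` and the `threshold` are all hypothetical choices, and the agents are run in turn rather than in parallel.

```python
def f(x):
    return (x - 20) ** 2  # toy objective, to be minimised


class Solver:
    """A solving agent: a simple local search with its own step size."""

    def __init__(self, x, step):
        self.x, self.step = x, step

    def run(self):
        # One local move: keep the best of x - step, x, x + step.
        self.x = min((self.x - self.step, self.x, self.x + self.step), key=f)
        return self.x, f(self.x)

    def receive(self, x):
        # Guideline from the coordinator: restart from the given solution.
        self.x = x


def degree_bad(cost, best_cost, worst_cost):
    """Assumed membership of a reported cost in the fuzzy set 'bad'."""
    if worst_cost == best_cost:
        return 0.0
    return (cost - best_cost) / (worst_cost - best_cost)


def coordinate(solvers, rounds, threshold=0.7):
    best = None
    for _ in range(rounds):
        # Each agent reports only to the coordinator.
        reports = [s.run() for s in solvers]
        costs = [c for _, c in reports]
        lo, hi = min(costs), max(costs)
        round_best = min(reports, key=lambda r: r[1])
        if best is None or round_best[1] < best[1]:
            best = round_best
        # Rule: IF the agent's solution is bad THEN send it the best one.
        for s, (_, c) in zip(solvers, reports):
            if degree_bad(c, lo, hi) >= threshold:
                s.receive(best[0])
    return best


solvers = [Solver(0, 1), Solver(100, 3), Solver(17, 2)]
best = coordinate(solvers, rounds=10)
print(best, [s.x for s in solvers])
```

In this toy run no agent would reach the optimum on its own within ten rounds, whereas the guidelines issued by the coordinator move the poorly placed agents to the promising region, where the agent with the finest step completes the search.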

Conclusion

The concept of fuzzy set has been and is a paradigm in the scientific-technological world with important repercussions in all social sectors because of the diversity of its applications, the ease of its technology transfer, and the economic savings that its use entails. Although the first article on the subject was met with resistance from certain academic sectors when it was published about 40 years ago, time has shown that fuzzy sets constitute the nucleus of a doctrinal body of indubitable solidness, dynamism and international recognition which is known as soft computing.

It is precisely this dynamism which has led us to reflect in this article on what the defining limits of soft computing are, in an attempt to widen the range of its basic components with the inclusion of metaheuristics. This wider and more general perspective of soft computing allows the possibility of incorporating new and as yet undeveloped search/optimization methods (without any of the already explored methods being the protagonist), thereby avoiding the tendency indicated by Zadeh in [3] to proclaim the methodology in which we are interested to be the best (which, as Zadeh pointed out, is yet another version of the famous hammer principle which says that "When the only tool you have is a hammer, everything begins to look like a nail").

References

[1] Zadeh, L.A. (1965): Fuzzy Sets. Information and Control 8, 338-353.

[2] Zadeh, L.A. (1994): Soft Computing and Fuzzy Logic. IEEE Software 11, 6, 48-56.

[3] Zadeh, L.A. (2001): Applied Soft Computing. Applied Soft Computing 1, 1-2.

[4] Li, X., Ruan, D. and van der Wal, A.J. (1998): Discussion on soft computing at FLINS'96. International Journal of Intelligent Systems 13, 2-3, 287-300.

[5] Bonissone, P. (2002): Hybrid Soft Computing for Classification and Prediction Applications. Invited lecture. 1st International Conference on Computing in an Imperfect World (Soft-Ware 2002), Belfast.

[6] Verdegay, J.L. (2005): Una revisión de las metodologías que integran la "Soft Computing". Actas del Simposio sobre Lógica Fuzzy y Soft Computing (LFSC2005). Granada, 151-156.

[7] Verdegay, J.L., Ed. (2003): Fuzzy Sets-based Heuristics for Optimization. Studies in Fuzziness. Springer Verlag.

[8] Melián, B., Moreno Pérez, J.A., Moreno Vega, J.M. (2003): Metaheurísticas: Una visión global. Revista Iberoamericana de Inteligencia Artificial 19, 2, 7-28.

[9] Cordón, O., F. Gomide, F. Herrera, F. Hoffmann, L. Magdalena (2004): Ten Years of Genetic Fuzzy Systems: Current Framework and New Trends. Fuzzy Sets and Systems 141:1, 5-31.

[10] Larrañaga, P., J.A. Lozano, H. Mühlenbein (2003): Algoritmos de estimación de distribuciones en problemas de optimización combinatoria. Inteligencia Artificial. Revista Iberoamericana de Inteligencia Artificial, 19(2), 149-168.

[11] Arenas, M.G., F. Herrera, M. Lozano, J.J. Merelo, G. Romero, A.M. Sánchez (Eds) (2005): Actas del IV Congreso Español sobre Metaheurísticas, Algoritmos Evolutivos y Bioinspirados (MAEB'05) I and II.

[12] Vergara-Moreno, E. (1999): Nuevos Criterios de Parada en Algoritmos de Optimizacion. Doctoral thesis. Universidad de Granada.

[13] Verdegay, J.L. and E. Vergara-Moreno (2000): Fuzzy Termination Criteria in Knapsack Problem Algorithms. Mathware and Soft Computing VII, 2-3, 89-97.

[14] Pelta, D.A. (2002): Algoritmos Heuristicos en Bioinformática. Doctoral thesis. Universidad de Granada.

[15] Blanco, A., D. Pelta and J.L. Verdegay (2002): A Fuzzy Valuation-based Local Search Framework for Combinatorial Problems. Fuzzy Optimization and Decision Making 1, 177-193.

[16] Cruz Corona, C. (2005): Estrategias cooperativas multiagentes basadas en Soft Computing para la solución de problemas de optimización. Doctoral thesis. Universidad de Granada.

[17] Pelta, D.A., A. Sancho-Royo, C. Cruz and J.L. Verdegay: Using memory and fuzzy rules in a co-operative multi-thread strategy for optimization. Information Sciences (in press).

[18] Osman, I.H. and Laporte, G. (1996): Metaheuristics: A bibliography. Annals of Operations Research 63, 513-623.

[19] Voss, S., Martello, S., Osman, I.H. and Roucairol, C., Eds. (1999): Meta-Heuristics: Advances and Trends in Local Search Paradigms for Optimization. Kluwer Academic Publishers.
